Customer satisfaction (CSAT) surveys: A complete guide with 60 questions

Sneha Arunachalam

Jan 2026

Customers won’t always complain. They’ll just leave.

A CSAT survey helps you catch dissatisfaction before it turns into churn by showing exactly how customers feel at critical moments.

In this guide, you’ll learn how CSAT surveys work, how to calculate and benchmark your score, and how to ask the right questions.

You’ll also get 60 proven CSAT survey questions and practical tips to turn feedback into real, visible improvements.

What is a CSAT survey and why it matters

Think of a CSAT survey as your customers' report card on your business. It measures how satisfied they are with your products, services, or specific interactions, capturing their honest feelings at key moments.

Understanding the CSAT metric

CSAT works pretty simply. You ask customers one straightforward question:

"How would you rate your overall satisfaction with the [goods/service] you received?"

They pick a number on a 1-5 scale, from "Very unsatisfied" to "Very satisfied."

Here's how you calculate your score: take the number of satisfied customers (those who gave you a 4 or 5) and divide by your total responses, then multiply by 100.

So if 80 out of 100 customers rated you a 4 or 5, you'd have an 80% CSAT score.
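If you'd rather see that as code, here's a minimal Python sketch of the same formula. The function name `csat_score` and the input checks are our own illustration, not part of any particular survey tool.

```python
def csat_score(satisfied: int, total: int) -> float:
    """Return CSAT as a percentage: satisfied responses / total responses * 100.

    `satisfied` is the number of responses rated 4 or 5 on a 1-5 scale.
    """
    if total <= 0:
        raise ValueError("total must be a positive number of responses")
    if not 0 <= satisfied <= total:
        raise ValueError("satisfied must be between 0 and total")
    return satisfied / total * 100


# The example from the text: 80 of 100 customers rated a 4 or 5.
print(csat_score(80, 100))  # 80.0
```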

Most industries see scores between the mid-60s and mid-70s, and anything below 40% is considered pretty rough.

A good CSAT score typically falls in the 75-85% range. But here's the thing: it varies by industry. Hospitality and banking often hit 79-82%, while insurance usually sits between 70% and 76%.

Check your score instantly with our CSAT calculator and see how customers really feel.

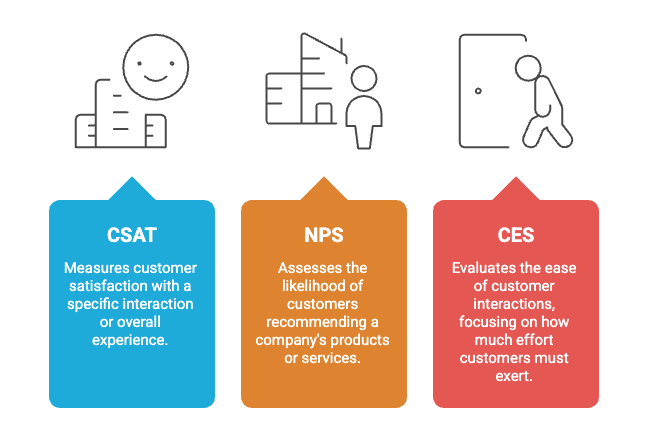

How CSAT differs from NPS and CES

You've probably heard about other customer metrics floating around. Here's how they stack up:

- CSAT zeroes in on satisfaction with specific experiences — it captures those immediate reactions and emotions. It's asking "How satisfied were you?" and focuses on what just happened.

- NPS takes a different angle by asking "How likely are you to recommend us?" Unlike CSAT's snapshot approach, NPS measures your overall relationship with customers.

- CES looks at effort. How hard did customers have to work to solve problems or complete tasks? Lower effort usually means higher satisfaction.

These metrics work together rather than competing with each other. CSAT gives you immediate feedback, NPS shows long-term loyalty, and CES reveals where customers hit roadblocks. Combined, they paint the full picture of your customer experience.

Check out our CSAT vs NPS vs CES guide for a detailed breakdown.

Why CSAT matters for your business

CSAT surveys directly impact your bottom line in ways that might surprise you.

They give you real-time insights into customer perceptions, so you can fix issues before they turn into lasting negative impressions.

This matters because 89% of customer experience professionals believe poor experiences directly contribute to churn.

CSAT also helps you pinpoint exactly where improvements are needed.

Since you can customize questions for different touchpoints (product features, support interactions, the checkout process), you get targeted feedback that tells you precisely what to fix.

Here's something encouraging: after positive experiences, 91% of customers will recommend your company. That's free marketing right there.

But the real magic happens when customers see you're not just collecting feedback, you're actually doing something with it. When they notice changes based on their suggestions, you build trust and loyalty.

That emotional connection translates directly into higher customer lifetime value and stronger relationships that weather the occasional bumps along the way.

Acting on feedback while the experience is still fresh

CSAT works best when feedback is collected at the right moment, immediately after an interaction, while the experience is still top of mind. Delayed surveys often miss context, emotion, and accuracy.

That’s why modern support teams trigger CSAT surveys right after a conversation is resolved, not days later.

When feedback is captured in real time, it reflects the actual experience and makes patterns easier to spot across agents, channels, and issue types.

SparrowDesk is designed around this idea. CSAT surveys are automatically sent the moment a conversation closes, ensuring teams get timely, contextual feedback and can act on it before small issues turn into churn.

Turn CSAT feedback into better customer experiences with SparrowDesk.

How to plan your CSAT survey effectively

Think of it like this: sending out a CSAT survey without a plan is like asking "How was your day?" to someone rushing through an airport. You might get an answer, but it won't tell you much.

Too many surveys fail because companies just throw questions together and hope for the best.

We totally get the pressure to start collecting feedback quickly, but a few minutes of planning upfront saves you from months of confusing data.

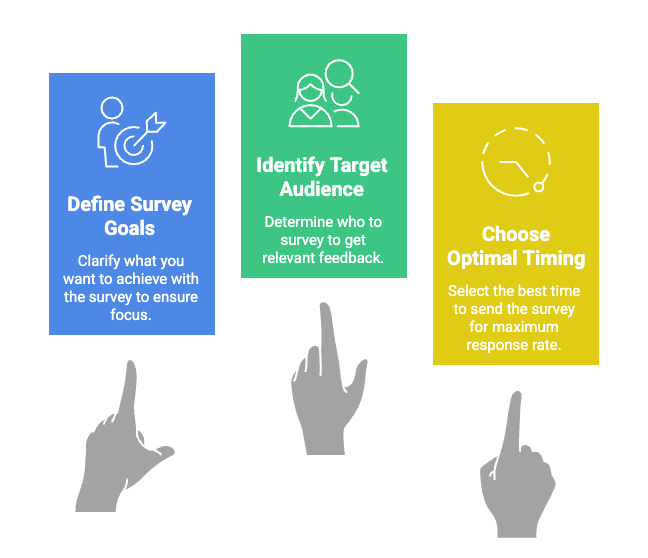

Define your survey goals

Here's the thing: you need to know exactly what you're trying to learn before you ask a single question. Your survey goals will guide everything from question selection to timing and audience targeting.

Effective CSAT survey goals might include:

- Measuring satisfaction with a specific product feature

- Evaluating customer service interactions

- Understanding your overall brand perception

- Improving specific KPIs like cart abandonment rates

- Gathering feedback on recent changes or updates

Once you've nailed down your main objective, make sure every question actually supports that goal.

This focused approach prevents you from creating a random collection of questions that just confuse everyone. You'll end up with feedback that actually helps you make decisions instead of just giving you more data to sort through.

Identify the right audience

Surveying everyone sounds smart until you realize it's not. The right people giving you feedback matters way more than the number of responses you get.

Consider targeting customers who have:

- Recently contacted customer support

- Completed a purchase within a defined timeframe

- Reached specific milestones in their customer journey

- Experienced new features or product changes

- Demonstrated consistent engagement with your product

Something else to keep in mind: B2B customers mostly use desktops during work hours, while B2C customers respond on mobile throughout the day. These patterns affect when and how you design your surveys.

For reliable results, aim for at least 200-300 responses.

And here's a pro tip — include screening questions to make sure respondents actually qualify for your survey. It saves time for everyone.
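Why a few hundred responses? One rough way to see it is the margin of error on a percentage. The sketch below uses the standard normal-approximation formula at 95% confidence and assumes your respondents are a simple random sample of your customers. Real survey data rarely is, so treat the output as a ballpark. The function name `margin_of_error` is ours.

```python
import math


def margin_of_error(csat_percent: float, responses: int, z: float = 1.96) -> float:
    """Approximate margin of error, in percentage points, for a CSAT score.

    Uses the normal approximation for a proportion; z = 1.96 corresponds
    to a 95% confidence level. Assumes a simple random sample.
    """
    if responses <= 0:
        raise ValueError("responses must be positive")
    p = csat_percent / 100
    return z * math.sqrt(p * (1 - p) / responses) * 100


# Compare a small sample with the 200-300 range suggested above.
for n in (50, 250, 1000):
    print(f"{n} responses: +/- {margin_of_error(75, n):.1f} points")
```

With a 75% score, 250 responses put the margin at roughly 5 points either way, while 50 responses leave it around 12. That's too wide to tell a real change from noise.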

Choose the right time to send the survey

Timing can make or break your response rates. You want to catch customers while their experience is still fresh in their minds.

Send surveys around these key moments:

- Closing a support ticket

- Completing a scheduled appointment

- Finishing the onboarding process

- Making a purchase (give them time to use the product first)

- Two to three months before renewal

Research shows Mondays generate about 13% more responses for customer surveys. For B2B audiences, Tuesdays and Thursdays work well too, especially before 10am or around 2pm.

Don't forget about time zones, local holidays, and cultural differences when planning your sends. Response rates usually peak between 9-11am and 1-3pm during normal workdays.

One last thing: if you're measuring satisfaction with a specific product, give customers enough time to actually experience it before asking for feedback. Premature surveys just give you incomplete answers.

Get these three pieces right — clear goals, the right audience, and smart timing — and you'll have surveys that actually tell you something useful instead of just generating more work.

Make perfect timing effortless

Knowing when to send a CSAT survey is one thing.

Making sure it actually goes out at the right moment, every single time, is another.

This is where most teams struggle. Surveys get delayed, sent in batches, or triggered manually — which means missed context and lower response rates. The result is feedback that’s late, incomplete, or disconnected from the real experience.

SparrowDesk removes that friction. CSAT surveys are automatically triggered at the exact moments that matter — right after a ticket is closed, an interaction ends, or a workflow completes.

No manual follow-ups. No guessing on timing. Just consistent, well-timed feedback while the experience is still fresh.

That way, your team focuses on improving customer experience, not managing survey logistics.

See how SparrowDesk automates perfectly timed CSAT surveys

How to design a CSAT survey that gets responses

Here's the thing about CSAT surveys — you can have the best intentions, but if your survey design stinks, customers won't bother responding. And when they do respond, the feedback might be pretty useless.

Think of it like this: if your survey is your chance to have a conversation with customers, you want that conversation to feel natural and worth their time. Most customers are filling these out on their phones while doing ten other things, so every word matters.

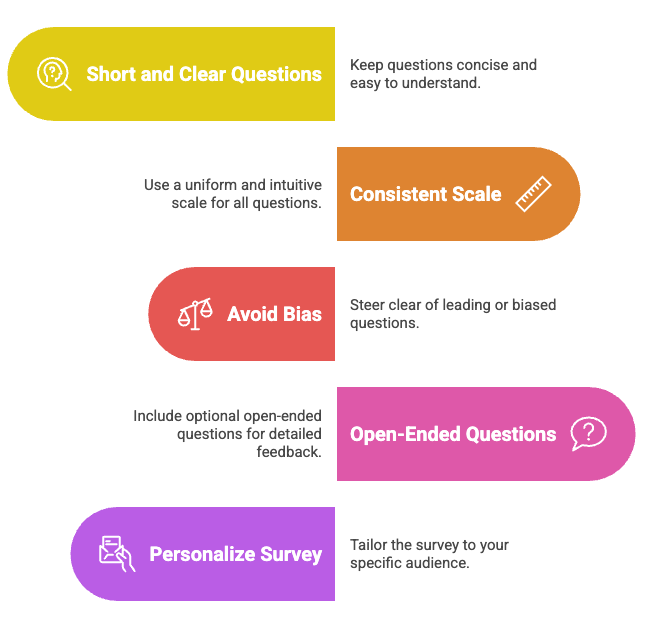

1. Keep questions short and clear

Your customers aren't going to sit there decoding complicated survey questions. They're probably checking your survey between meetings or while waiting in line somewhere.

Here's what works:

- One main question (your CSAT rating)

- Maybe one follow-up question for context

- Language that a middle schooler could understand

We totally get the temptation to ask everything at once, but resist it. The moment customers see a wall of text or confusing jargon, they're out. Your survey should feel more like a quick check-in than a final exam.

2. Use a consistent and intuitive scale

Nothing frustrates customers more than trying to figure out what your rating scale actually means. Does 1 mean good or bad? What's the difference between a 3 and a 4?

Your scale options are pretty straightforward:

- Simple emoji faces (happy, neutral, sad)

- Thumbs up/thumbs down

- 1-5 or 1-10 number scales

Pick one and stick with it throughout your entire survey. Some smart folks actually recommend skipping the neutral middle option entirely — it forces customers to actually pick a side instead of playing it safe.

3. Avoid leading or biased questions

This is where a lot of surveys go wrong without anyone realizing it. You might accidentally be pushing customers toward the answers you want to hear.

Instead of asking "We love hearing about great experiences. How would you rate your experience?" just ask "How would you rate your experience?" See the difference? The first version practically begs for a positive response.

Same goes for cramming two questions into one. Don't ask "How satisfied were you with our product quality and customer service?" because that's really two separate things. Keep it simple and focused.

4. Include optional open-ended questions

Numbers tell you what happened, but they don't tell you why. That's where one good open-ended question saves the day.

Try something like "What's the main reason for your rating?" right after your CSAT question. You can even customize these based on how they rated you:

- Happy customers (4-5): "What did you like most?"

- Neutral customers (3): "What would have made this better?"

- Unhappy customers (1-2): "What went wrong?"

Just don't go overboard — one open-ended question is usually plenty. More than that and you'll lose people.
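If your survey tool supports conditional logic, the rating-based follow-up described above boils down to a small lookup. Here's a hedged Python sketch; the function name `follow_up_question` is hypothetical, and the question wording is taken straight from the examples in this section.

```python
def follow_up_question(rating: int) -> str:
    """Pick one open-ended follow-up based on a 1-5 CSAT rating."""
    if rating not in range(1, 6):
        raise ValueError("rating must be an integer from 1 to 5")
    if rating >= 4:  # happy customers
        return "What did you like most?"
    if rating == 3:  # neutral customers
        return "What would have made this better?"
    return "What went wrong?"  # unhappy customers (1-2)


print(follow_up_question(5))
print(follow_up_question(2))
```

Each customer still sees only one open-ended question, which keeps the survey short.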

5. Personalize the survey for your audience

A generic survey feels like junk mail. A personalized one feels like you actually care about the specific person filling it out.

Quick wins include:

- Using your company colors and logo so they know it's really from you

- Addressing customers by name when you can

- Matching the tone to your brand (formal vs. casual)

- Adding emoji-style buttons that people actually want to click

But here's what really matters — make sure your questions fit your business. "How satisfied are you with the product so far?" works great for product feedback. "How would you rate your experience with our support team?" is better for service interactions. Small changes like this make customers feel like you understand what they just experienced.

Designing CSAT surveys is easier when the tool gets out of the way

Knowing how to design a great CSAT survey is one thing. Making sure it’s consistently delivered, well-timed, and easy for customers to respond to is where most teams hit friction.

This is exactly where SparrowDesk helps.

It lets teams create simple, on-brand CSAT surveys that follow best practices by default: one clear rating question, optional follow-ups, and intuitive scales that work well on mobile.

Surveys are sent automatically right after a support conversation ends, so customers respond while the experience is still fresh.

Because CSAT responses are tied directly to the original conversation, teams don’t just see scores, they see context.

That makes it easier to understand why customers feel the way they do and act on feedback quickly, instead of guessing or digging through reports.

60 CSAT survey questions you can use

Getting good CSAT feedback comes down to asking the right questions at the right moments. Here are 60 CSAT survey questions that actually work, organized by category so you can grab exactly what you need.

Product satisfaction questions

These questions dig into how customers really feel about your product or service:

1. On a scale of 1 to 5, how satisfied are you with our product/service?

2. How well does our product meet your needs?

3. Which features of our product do you find most valuable?

4. What specific features are missing from our product?

5. How would you rate the performance and speed of our product?

6. Have you encountered any issues or bugs while using our product?

7. How would you rate the user-friendliness of our product's interface?

8. If you could change one thing about our product, what would it be?

Customer service experience questions

Use these to measure satisfaction with your support team:

9. How would you rate the support you received?

10. How responsive have we been to your questions or concerns?

11. How would you rate the availability of our customer service channels?

12. Were you satisfied with the resolution of your issue?

13. How would you describe your experience with our support agents?

14. How much personal effort did you have to put forth to handle your request?

15. How did this effort compare to your expectations?

16. To what extent do you agree: "The company made it easy for me to handle my issue"

Website or app usability questions

These questions focus on your digital experience:

17. How easy was it to navigate our website?

18. Were you able to find the information you were looking for?

19. How would you rate the loading speed of our website?

20. Did you experience any technical issues while browsing?

21. Was it easy to complete your intended task on our website/app?

22. How responsive was the website to your clicks and interactions?

23. Did you notice any broken links or missing images?

24. How would you rate our mobile experience compared to desktop?

Post-purchase experience questions

Perfect for capturing feedback right after customers buy from you:

25. How satisfied are you with your purchase today?

26. Does the product's price align with your expectations for quality and value?

27. What problem does this product help you solve?

28. How often will you use the product you purchased?

29. How likely are you to buy again from us?

30. Based on its price, would you recommend this product to a friend?

31. What's one thing that would improve our product or service?

32. What would you say to someone who asked about us?

Delivery and logistics questions

If you ship physical products, these questions cover the fulfillment experience:

33. Were you satisfied with the speed of our product delivery?

34. How would you rate your delivery experience based on order accuracy?

35. Was the product delivered to you in appropriate condition?

36. Was the fragile product properly packaged to prevent breaking?

37. How would you rate the conduct of our delivery staff?

38. Did you face any issues like breaking or leakage in the product?

39. Was the food delivered to you fresh and hot? (for food delivery)

40. How would you rate your overall delivery experience?

General brand perception questions

Want to understand how customers see your brand overall? Try these:

41. How does our brand make you feel?

42. Which words best describe our brand?

43. How would you describe our brand to a friend?

44. How would you describe your level of attachment to our brand?

45. What three words best describe your feelings toward our brand?

46. How familiar are you with our brand?

47. Have your feelings toward our brand changed in the last year?

48. Compared to competitors, is our product quality better, worse, or about the same?

Open-ended follow-up questions

These questions get customers talking and give you the real story behind their ratings:

49. What's the main reason for your score?

50. What could we improve about our product/service?

51. What did you like most about your experience?

52. Is there anything else you'd like us to know?

53. What would make your experience better?

54. What product feature isn't working for you and why?

55. What product should we release next?

56. What other brands do you like to buy from?

Demographic and segmentation questions

Use these to understand different customer segments better:

57. How many employees does your company have?

58. What industry are you in?

59. How long have you been using our product?

60. How frequently do you use our product?

61. Which of these features do you use most often?

62. What is your age?

63. What is your annual household income?

64. Where are you located?

Best ways to distribute your customer satisfaction (CSAT) survey

You can create the perfect CSAT survey, but if it never reaches your customers, it's useless. The best approach depends on where your customers actually spend their time and how they prefer to engage with you.

Email surveys

Email surveys are still popular, and for good reason. They give you flexibility to reach customers after meaningful interactions while allowing time for thoughtful responses.

To make email surveys work:

- Send from verified addresses to avoid spam filters

- Time your emails strategically (mid-morning on weekdays works best)

- Keep subject lines clear and compelling

- Ensure mobile optimization (many recipients will view on phones)

The big advantage? You can track engagement over time, down to individual contact levels. But here's the problem — by the time that survey hits someone's inbox, the moment might have passed. The feedback becomes colder, disconnected from the actual experience you're trying to measure.

In-app or in-product surveys

This is where things get interesting. In-app CSAT surveys solve the timing problem by catching customers right when the experience is happening. These brief forms pop up directly in your app without pulling users away from what they're doing.

Why in-app surveys work so well:

- Higher response rates because you're meeting users where they already are

- Clearer insights with feedback tied directly to specific actions

- Less friction — users can respond with a quick click

- More accurate data capturing the emotional truth in the moment

The key is triggering these surveys after meaningful interactions — support resolutions, onboarding completion, feature usage, or transactions. When experiences are still fresh, that's when you get the most honest feedback.

SMS and text-based surveys

Want to talk about reach? Text messaging gets a 98% open rate compared to email's measly 20%. That makes SMS incredibly valuable for time-sensitive feedback.

SMS surveys work because:

- Most people check text messages constantly throughout the day

- Recipients can respond right within their messaging app

- The format naturally encourages brief, immediate feedback

Keep it short though. Character limitations mean you need a clear numerical question followed by a tailored follow-up based on their response. Send surveys right after conversations end or schedule them for key moments like post-delivery.

Social media polls

Social media opens up your feedback to a wider audience — not just existing customers, but potential ones too. This works particularly well for broader brand perception questions.

The benefits are obvious:

- Free access to followers who already support your brand

- You can target specific audiences through hashtags or paid promotions

- Higher visibility through shares and engagement

The downside? Character limitations force you to keep things extremely concise. Consider adding links to more detailed surveys for people who want to give additional feedback.

Using multiple channels together

Here's what successful companies do — they don't rely on just one distribution method. This approach helps you reach different segments of your customer base while gathering feedback at various journey stages.

Think about matching channels to specific touchpoints:

- Email for post-purchase or quarterly relationship feedback

- In-app surveys for feature or product experience feedback

- SMS for immediate post-interaction responses

- Social media for brand perception insights

When you use the right channel for each touchpoint, you'll collect feedback that actually makes sense in context. This multi-channel strategy lets you meet customers wherever they prefer to engage, giving you a complete picture of satisfaction across your entire customer experience.

Bringing multi-channel CSAT distribution together

Choosing the right channels is important. Managing all of them manually is where things usually fall apart.

Teams end up sending surveys late, inconsistently, or without context, which leads to lower response rates and feedback that’s hard to act on.

The real challenge isn’t distribution itself. It’s coordinating timing, channels, and follow-ups without creating more operational work.

SparrowDesk brings CSAT distribution into the same place where customer conversations already live.

Surveys can be triggered automatically after support resolutions and key touchpoints, ensuring feedback goes out through the right channel at the right moment without manual effort.

Because responses are tied directly to conversations, teams get clear, contextual insights across channels instead of scattered data points.

The result is higher-quality feedback and a complete view of customer satisfaction across the entire support journey.

Send CSAT surveys at the right time, on the right channel, with SparrowDesk

How to analyze and act on CSAT results

Getting survey responses is just step one. The real magic happens when you dig into that data and actually do something with it.

Here's the thing: most companies only hear from 4% of dissatisfied customers, while the other 96% just disappear without saying a word.

That makes analyzing your CSAT results absolutely critical for keeping customers around.

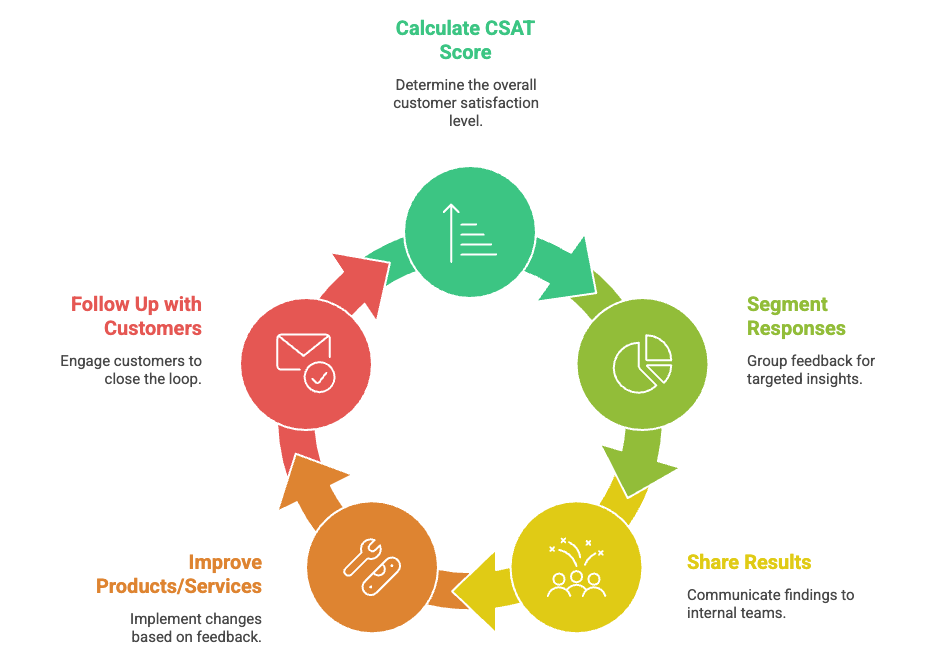

Step 1: Calculate your CSAT score

The standard way to calculate CSAT focuses on your happy customers — those who rate you 4 or 5 on a five-point scale, since they're the ones most likely to stick around. The formula couldn't be simpler:

(Number of satisfied customers ÷ Total survey responses) × 100 = % of satisfied customers

So if 225 out of 300 people rated you a 4 or 5, you're sitting at 75%. You can also calculate an average by adding all ratings and dividing by total responses. A good score typically falls in the 75-85% range, with anything above 80% considered excellent.
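The percentage and the average can give different impressions of the same responses, so here's a small Python sketch that runs both. The rating breakdown is invented for illustration; only the 225-out-of-300 figure comes from the example above.

```python
from collections import Counter

# Hypothetical breakdown of 300 responses on a 1-5 scale (made-up numbers).
rating_counts = Counter({5: 140, 4: 85, 3: 40, 2: 20, 1: 15})

total = sum(rating_counts.values())              # 300 responses
satisfied = rating_counts[4] + rating_counts[5]  # 225 rated 4 or 5

# Percentage method: share of satisfied (4-5) responses.
csat_percent = satisfied / total * 100

# Average method: sum of all ratings divided by total responses.
average_rating = sum(rating * count for rating, count in rating_counts.items()) / total

print(f"CSAT: {csat_percent:.1f}%")            # CSAT: 75.0%
print(f"Average rating: {average_rating:.2f}")  # Average rating: 4.05
```

The percentage treats a 1 and a 3 the same way (both count as not satisfied), while the average shifts with every rating. Pick one method and report it consistently.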

Step 2: Segment responses for deeper insights

Your overall score tells part of the story, but breaking things down by segments reveals where the real issues live:

- Customer types: Are new customers happier than long-timers?

- Touchpoints: Which channels are creating the most frustration?

- Product areas: What features keep generating support tickets?

- Issue types: Which problems tank satisfaction the most?

This breakdown helps you focus your energy where it'll make the biggest difference. Track these segments over time and you'll spot trends before they become bigger problems.
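Here's a rough Python sketch of that kind of breakdown. The response records, field names (`customer_type`, `channel`, `rating`), and the helper `csat_by` are all hypothetical; your survey export will look different.

```python
from collections import defaultdict

# Hypothetical survey responses; fields and values are made up for illustration.
responses = [
    {"customer_type": "new", "channel": "chat", "rating": 5},
    {"customer_type": "new", "channel": "email", "rating": 4},
    {"customer_type": "new", "channel": "email", "rating": 2},
    {"customer_type": "new", "channel": "chat", "rating": 5},
    {"customer_type": "long_term", "channel": "email", "rating": 3},
    {"customer_type": "long_term", "channel": "chat", "rating": 4},
    {"customer_type": "long_term", "channel": "email", "rating": 1},
    {"customer_type": "long_term", "channel": "email", "rating": 2},
]


def csat_by(responses, key):
    """Return the CSAT percentage (share of 4-5 ratings) for each value of `key`."""
    totals = defaultdict(int)
    satisfied = defaultdict(int)
    for response in responses:
        group = response[key]
        totals[group] += 1
        if response["rating"] >= 4:
            satisfied[group] += 1
    return {group: satisfied[group] / totals[group] * 100 for group in totals}


print(csat_by(responses, "customer_type"))
print(csat_by(responses, "channel"))
```

In this toy data the overall score is 50%, but the split by channel shows chat doing well and email dragging the number down. That's the kind of detail the overall score hides.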

Step 3: Share results with internal teams

Don't let CSAT data sit in the customer service silo. Smart companies spread this stuff around by:

- Building dashboards that show engagement levels and actual comments

- Setting up a Slack channel for real-time CSAT updates

- Running regular meetings where teams review results together

- Sending company-wide summaries of key findings

When everyone sees the same customer feedback, problems get fixed faster — nobody's left guessing what customers actually think.

Step 4: Use feedback to improve products and services

Here's where analysis turns into action:

- Spot your "top 3 CSAT killers" each quarter

- Figure out how much they're costing you in satisfaction and churn

- Come up with specific fixes — process tweaks, product updates, policy changes

- Make those changes happen

- Track CSAT in those areas to see if things improve

This approach ensures you're fixing the stuff that actually matters to customers. If your CSAT scores aren't driving real changes, you're missing the whole point.
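One simple way to find your "top 3 CSAT killers" is to count which issue types show up most often among low ratings. The sketch below assumes each response is tagged with an `issue_type` and treats ratings of 1-2 as dissatisfied. Both are our assumptions, as is the helper name `top_csat_killers`.

```python
from collections import Counter

# Hypothetical tagged responses; issue types and ratings are made up.
responses = [
    {"issue_type": "billing", "rating": 1},
    {"issue_type": "billing", "rating": 2},
    {"issue_type": "billing", "rating": 2},
    {"issue_type": "billing", "rating": 5},
    {"issue_type": "shipping", "rating": 1},
    {"issue_type": "shipping", "rating": 2},
    {"issue_type": "shipping", "rating": 4},
    {"issue_type": "login", "rating": 2},
    {"issue_type": "login", "rating": 5},
    {"issue_type": "onboarding", "rating": 5},
]


def top_csat_killers(responses, n=3, low_threshold=2):
    """Return the n issue types with the most low ratings (<= low_threshold)."""
    low_ratings = Counter(
        response["issue_type"]
        for response in responses
        if response["rating"] <= low_threshold
    )
    return low_ratings.most_common(n)


print(top_csat_killers(responses))
# [('billing', 3), ('shipping', 2), ('login', 1)]
```

Raw counts favor high-volume issue types, so it's worth also checking each type's share of low ratings before deciding what to fix first.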

Step 5: Follow up with customers to close the loop

Want to turn feedback into loyalty? Show customers their voice matters:

- Thank everyone who responds, regardless of their rating

- Reach out to unhappy customers right away to fix their issues

- When you make improvements based on feedback, let people know

Customers whose problems get addressed often become more loyal than those who never had issues in the first place.

Even better — 81% of customers say they'd give more feedback if they knew they'd get a quick response. That's how you turn survey data into real relationships.

Here's what matters most

You've got everything you need to start getting real feedback from your customers. The 60 questions we covered give you options for every situation, whether you're checking on product satisfaction, support interactions, or overall brand feelings.

But here's the thing: surveys only work if you actually do something with the responses. We totally get that it's tempting to just collect data and call it done. That won't help anyone.

The magic happens when you close the loop. Thank people for taking the time to respond. Fix the problems they point out. Let them know when you've made changes because of their feedback. Customers who see you actually listening? They stick around.

Start wherever feels right for your business. Maybe that's one simple post-purchase survey, or checking in after support tickets get resolved. You don't need to launch everything at once.

Keep an eye on your scores, sure, but focus more on the trends. A 70% CSAT that's climbing beats an 80% that's dropping every time.

Your customers are already forming opinions about your business. The question is whether you're going to find out what those opinions actually are — and do something about them.

Turn CSAT feedback into action automatically

Listening to customers is powerful. Acting on their feedback, consistently and at scale, is where most teams struggle.

SparrowDesk is built to close the loop for you. CSAT surveys are sent automatically right after support conversations end, while the experience is still fresh.

Feedback is tied directly to each ticket, giving your team the full context behind every score, not just numbers in a dashboard.

That makes it easy to spot patterns, follow up with unhappy customers, and reinforce what’s working with happy ones. Instead of collecting feedback and hoping someone acts on it, SparrowDesk helps teams turn CSAT into real improvements that customers actually notice.

Turn customer feedback into better experiences with SparrowDesk

Key takeaways

CSAT surveys are essential for business success, as 73% of customers will switch after multiple bad experiences. Here's what you need to know to create effective customer satisfaction surveys that drive real improvements:

• Keep surveys short and focused - Lead with one primary CSAT rating question and add at most one optional open-ended follow-up to maximize completion rates

• Time your surveys strategically - Send immediately after key touchpoints while experiences are fresh, with Mondays showing 13% higher response rates

• Use multiple distribution channels - Combine in-app surveys (highest response rates), email, SMS (98% open rates), and social media to reach customers where they engage

• Aim for CSAT scores above 75% - Calculate using satisfied customers (4-5 ratings) divided by total responses, with 80%+ considered excellent performance

• Close the feedback loop - Thank all respondents, address low scores immediately, and implement visible changes based on feedback to build customer loyalty

• Segment your data for actionable insights - Break down results by customer type, touchpoints, and product areas to identify specific improvement opportunities

The key to successful CSAT programs isn't just collecting data—it's acting on feedback quickly and showing customers their voices matter through tangible improvements.

